Computer Science Week

Diving into interactive and immersive computing

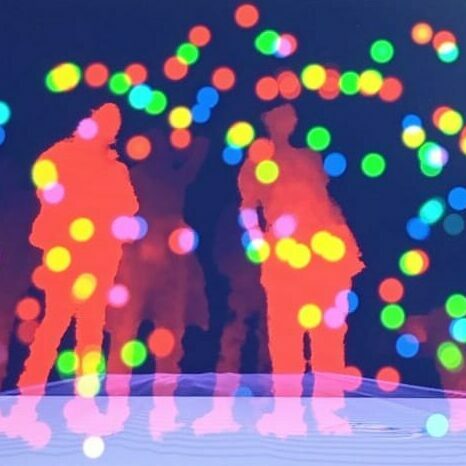

The “Virtual Ball Pit” was an immersive, hands-on installation that introduced students to interactive software and sensor-based technology. Hosted in the Immersive Visualization Room, it combined Kinect motion tracking, sound visualization, and webcam-based interaction into a single real-time experience.

Exploring the Virtual Ball Pit

As part of Computer Science Day, students were invited to try an interactive installation in the Immersive Visualization Room. The activity, built with a combination of VVVV and Python, placed participants in the middle of a dynamic virtual ball pit. Using Kinect technology, the installation detected their silhouettes and let them interact with the virtual environment in real time. The experience provided a hands-on introduction to sensor integration, real-time data processing, and the development of immersive, interactive systems.
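To give a feel for how a detected silhouette can interact with a virtual ball, here is a minimal Python sketch. It is not the installation's code: the function name, the boolean-grid representation of the silhouette, and the pixel coordinates are all assumptions made for illustration. It simply checks whether any silhouette pixel falls inside a ball's circle.

```python
# Hypothetical sketch: the names and data layout are assumptions,
# not taken from the installation itself.

def ball_hits_silhouette(mask, cx, cy, radius):
    """Return True if any silhouette pixel lies inside the ball.

    mask   -- 2D list of booleans (rows of pixels), True where a person is detected
    cx, cy -- ball centre in pixel coordinates (x = column, y = row)
    radius -- ball radius in pixels
    """
    height = len(mask)
    width = len(mask[0]) if height else 0

    # Only scan the ball's bounding box, clipped to the image borders.
    y0 = max(0, int(cy - radius))
    y1 = min(height - 1, int(cy + radius))
    x0 = max(0, int(cx - radius))
    x1 = min(width - 1, int(cx + radius))

    for y in range(y0, y1 + 1):
        for x in range(x0, x1 + 1):
            # A hit is a silhouette pixel within `radius` of the ball centre.
            if mask[y][x] and (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                return True
    return False


# Tiny example: an 8x8 frame with a single silhouette pixel at column 4, row 4.
example_mask = [[False] * 8 for _ in range(8)]
example_mask[4][4] = True
touching = ball_hits_silhouette(example_mask, 5, 4, 1.5)   # ball next to the pixel
far_away = ball_hits_silhouette(example_mask, 1, 1, 1.5)   # ball in a corner
```

In a real-time system a check like this would run for every ball on every frame, which is why the scan is limited to the ball's bounding box rather than the whole image.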

Tracking Presence, Visualizing Sound

At the heart of the experience was the Kinect sensor, which tracked participants’ movements so that the software could calculate collisions with the virtual balls in the pit. Simultaneously, the room’s integrated microphone captured ambient sound, which was processed with an FFT and displayed on the screen as visual waveforms. This created a multisensory environment that highlighted the relationship between sound and movement. Additionally, a ceiling-mounted webcam tracked people’s positions in the space, translating their X,Y coordinates into “water perturbations” on the screen and adding an extra layer of interactivity.
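The sound-visualization step can be illustrated with a short Python sketch. This is an assumption-laden example rather than the installation's actual pipeline: the sample rate, frame size, and function names are hypothetical, and a synthetic sine tone stands in for the microphone. It uses a textbook radix-2 FFT to turn one frame of audio samples into frequency magnitudes, the kind of values a visualizer could draw as bars or curves.

```python
import cmath
import math


def fft(samples):
    """Recursive radix-2 Cooley-Tukey FFT; len(samples) must be a power of two."""
    n = len(samples)
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def magnitude_spectrum(samples):
    """Normalized magnitudes of the non-negative frequency bins (0 .. n/2 - 1)."""
    n = len(samples)
    return [abs(c) / n for c in fft(samples)[: n // 2]]


# Synthetic stand-in for one microphone frame (assumed values):
# a 1 kHz tone sampled at 8 kHz, 64 samples long.
SAMPLE_RATE = 8000
FRAME_SIZE = 64
frame = [math.sin(2 * math.pi * 1000 * i / SAMPLE_RATE) for i in range(FRAME_SIZE)]

spectrum = magnitude_spectrum(frame)

# The loudest bin tells us which frequency dominates the frame.
peak_bin = max(range(len(spectrum)), key=spectrum.__getitem__)
peak_hz = peak_bin * SAMPLE_RATE / FRAME_SIZE
```

With these assumed settings each bin covers 125 Hz, so the 1 kHz test tone lands in bin 8. A live visualizer would repeat this on each new frame of microphone samples and redraw the result.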

A Hands-On Learning Experience

The goal of this installation was to immerse students in the process of building interactive software and systems. Through exploring real-time sensor data, collision detection, and live sound analysis, participants gained insight into the complexities of software development, sensor integration, and the creative possibilities that arise when combining diverse technologies. The activity also offered a behind-the-scenes look at the tools and techniques used to design and deploy such an installation, sparking curiosity and excitement for the future of interactive computing.